P-hacking

Katrina Borthwick - 20th February 2023

P-hacking is a data analysis technique that can be used to present patterns as statistically significant when there is really no underlying effect. It is a misuse of statistics and a misrepresentation, plain and simple, and disappointingly it’s usually perpetrated by scientists.

Pair this with a bias towards reporting on positive results, no matter how tenuous, and add a bit of journalistic flare in the headline, and things can get quite out of control. This is the case even if your study is a deliberate and publicly declared hoax. More on that later…

What is p-hacking?

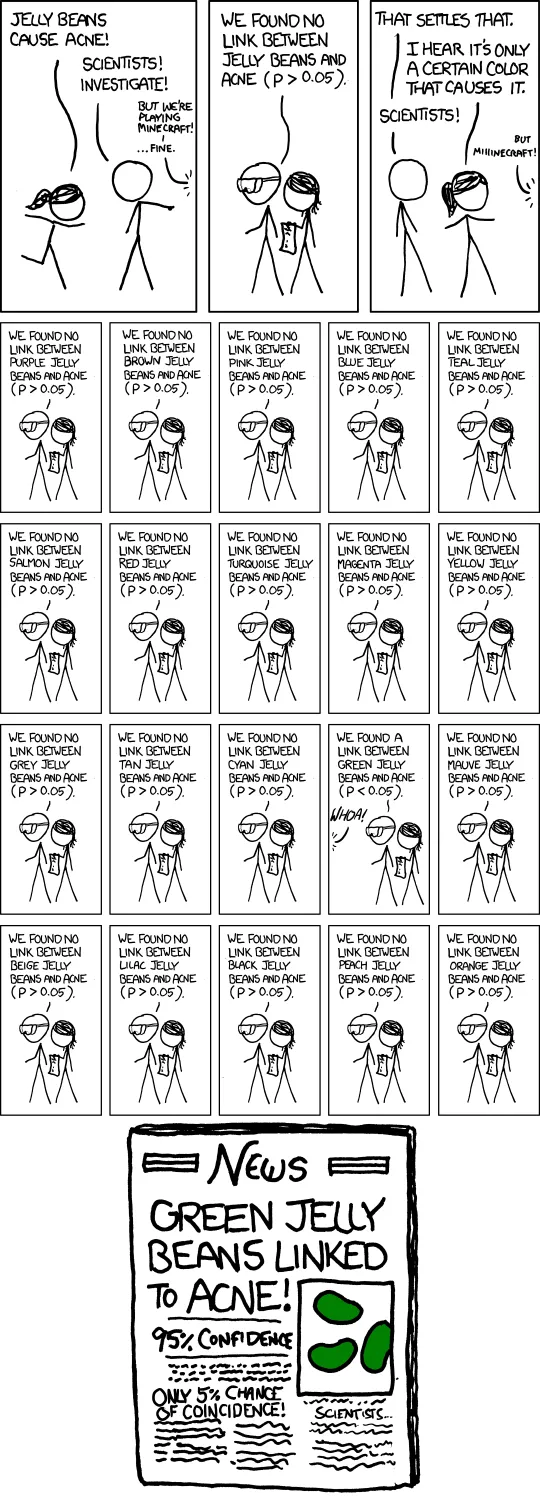

In a nutshell, p-hackers perform a large number of statistical analyses on their data in different ways, with the intention of finding a desirable p-value (usually lower than 0.05). That means there is a less than 5% chance the result has been found by chance. But obviously if you have tried a very large number of permutations, or divided the data up in numerous ways, then there is a high chance you are going to find some weirdness in some parts of the data somewhere. When you find that thing, you can go back and change your method and hypothesis to fit the data, and publish your “significant” result, completely omitting any reference to all the other permutations that didn’t turn up anything.

The following XKCD cartoon absolutely nails it:

If you want to more about the technicalities there is a great video here:

Some real-life examples of p-hacking are:

-

The Cornell researcher Brian Wansink was the director of Cornell’s Food and Brand Lab. His studies are about how our environment shapes how we think about food, and what we end up consuming. For years, he was known as a “world-renowned eating behavior expert.” He once led the USDA committee on dietary guidelines and influenced public policy, including the introduction of smaller packages for portion control. He advised the US Army and Google on how they could encourage healthy eating. Cited more than 20,000 times, he had 15 studies retracted in 2018 and was removed from all teaching and research. Dozens more of his papers were called into question.

-

The chocolate weight loss hoax study conducted by journalist John Bohannon, who explained publicly in a Gizmodo article that this study was deliberately conducted fraudulently as a social experiment. This went about as well as crop circle admissions of guilt did, and the study was spread in many media outlets around 2015, with many people believing the claim that eating a chocolate bar every day would cause them to lose weight. Sigh! The study was published in the Institute of Diet and Health.

-

A study by Bruns and Ioannidis in 2016 dropped the gender control in their study on p-hacking, using as a practical example the evaluation of the effect of malaria prevalence on economic growth between 1960 and 1996. They concluded P-curves were not reliable. Ironically their study methodology actually mistook false positives for confounding variables, and Simonsohn et al responded with a bit of a takedown (link below). It turns out removing gender control also dropped the reported t-value from t=9.29 to t=0.88. This drop meant they were showing a non-causal effect where a causal one was previously recorded (the t-value measures the size of the difference relative to the variation in your sample data – whether it is out of scale with how the data is varying). This is an important finding because t-values are inversely proportional to p-values, meaning higher t-values (t > 2.8) indicate lower p-values. By controlling for gender, they flattened the variation in the sample data, which means the differences showed up more and the t-value is inflated, thus artificially deflating the p-value as well. Sneaky.

How bad is it?

I’m not going to cherry pick here. There was a 2015 study on “The Extent and Consequences of P-Hacking in Science” (link below). They put together a methodology to determine if p-hacking had possibly occurred, and concluded that based on their data that p-hacking is probably common, but its effect seems to be weak relative to the real effect sizes being measured, and it probably does not drastically alter scientific consensuses drawn from meta-analyses.

However, as we have seen, the individual studies themselves can generate a bit of interest, and lead to the public basing their decisions on nonsense. Also, why would we use dodgy data if we can avoid it?

What can researchers do?

The general acceptance of these practices needs to stop. It is a form of scientific misconduct.

Researchers and journals could report all their dependent measures, including the stuff that fails to show a significant result.

The practice of deciding to ‘look for more data’ after getting a non-significant result, or changing the methodology or hypothesis slightly to fit the results, needs to be disclosed or stopped where the research is not clearly stated to be ‘exploratory’ in nature rather than testing a particular hypothesis. To this end, it would be best to require entire methods, including hypotheses, to be pre-specified in advance of the study, and for any subsequent adjustments to these to be disclosed. These should be available to view alongside the research online. Some journals are already moving in this direction, and won’t accept papers where the methods have not been received prior to the study’s commencement.

It is also important that we’re not incentivising the wrong behaviours. That means placing greater value on the quality of research methods and data collection, rather than whether there is a positive finding, when reviewing or assessing research. This is related to publishing bias – where negative results are often not published. Researchers want to get published, and this bias incentivises p-hacking.

Some other practical things researchers can do are measuring only response variables that are known (or predicted) to be important (not green jelly beans), using sufficient sample sizes, and performing data analysis blind wherever possible.

Finally, it would be good to see open access to the raw data. This makes researchers more accountable for marginal results, and allows reanalysis to check robustness. It also increases the likelihood of getting caught if you are being creative with the data, which is a disincentive in itself. Nobody wants to be the next Brian Wansink.

- The Extent and Consequences of P-Hacking in Science - PMC (nih.gov)

- John Bohannon - Wikipedia – the intentionally misleading chocolate study

- P-curve won’t do your laundry, but it will distinguish replicable from non-replicable findings in observational research: Comment on Bruns & Ioannidis (2016) | PLOS ONE